Since ChatGPT debuted in late 2022, AI has moved from research labs into everyday use, bringing to life capabilities that were once confined to science fiction. If you find yourself asking what AI is and how it works, you’re in the right place. This article explains what Artificial Intelligence is, traces its history, and describes how it works beneath the surface.

What is AI (Artificial Intelligence)?

Artificial Intelligence, commonly known as AI, is technology that enables computer systems to perform tasks that normally require human intelligence. It allows machines to simulate human abilities such as learning, understanding, decision-making, problem-solving, and reasoning. AI systems can process language, analyze data, generate content, perceive their surroundings, and even take actions.

AI is a branch of computer science, and a young one compared with established fields like physics or mathematics: it was formally founded in 1956. Its development has drawn not only on computer science but also on linguistics, neuroscience, psychology, and philosophy.

In essence, AI aims to build machines that can learn, reason, and act in ways we associate with human intelligence.

How Does AI Actually Work?

Unlike traditional software, which follows strict, predetermined instructions, AI systems learn their behavior from data. A calculator, for example, accepts input only in a fixed format and applies fixed rules, so its output is entirely predictable. An AI system, by contrast, recognizes patterns in large datasets and can respond to inputs it was never explicitly programmed to handle. Its outputs are based on probabilities rather than fixed rules, so the same prompt can produce different answers.
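As a rough illustration of this contrast, the Python sketch below compares a fixed-rule function with a toy version of how a language model picks its next word: it converts raw scores into probabilities with a softmax and samples from them. The function names, the three candidate words, and their scores are all invented for illustration; real models score tens of thousands of candidate tokens using learned parameters.

```python
import math
import random


def calculator_add(a: float, b: float) -> float:
    """Traditional software: a fixed rule, so the same input always gives the same output."""
    return a + b


def softmax(scores: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_word(words: list[str], scores: list[float],
                     rng: random.Random, temperature: float = 1.0) -> str:
    """Language-model-style choice (hypothetical helper): sample a word by its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Invented scores a model might assign to candidate words after "The sky is"
words = ["blue", "clear", "falling"]
scores = [3.0, 2.0, 0.1]

print(calculator_add(2, 2))  # always 4
rng = random.Random(0)
print([sample_next_word(words, scores, rng) for _ in range(5)])  # varies with the seed
```

Lowering `temperature` concentrates probability on the highest-scoring word, which makes the output more predictable; raising it spreads probability across the alternatives.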

At the core of modern AI is machine learning. Instead of being programmed for every conceivable question or scenario, AI systems are trained on vast amounts of data, including text, images, videos, and audio. This training enables them to process new information and provide meaningful responses even to inputs they have never seen.
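“Training” here means repeatedly adjusting a model’s internal parameters to reduce its error on known examples. Below is a minimal sketch, assuming the simplest possible model, a straight line `y = w * x + b`, fitted with gradient descent to five invented data points. Real systems have billions of parameters and far more data, but the basic loop of predicting, measuring the error, and nudging the parameters is similar in spirit.

```python
def train_linear_model(xs, ys, learning_rate=0.01, epochs=5000):
    """Fit y ≈ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Move each parameter a small step in the direction that lowers the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Invented training examples that roughly follow y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]

w, b = train_linear_model(xs, ys)
print(f"learned w={w:.2f}, b={b:.2f}")
print(f"prediction for unseen x=10: {w * 10 + b:.1f}")
```

After training, the model can make a prediction for `x = 10`, a value that never appeared in its training data.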

Imagine teaching a child to recognize dogs. Instead of detailing every dog’s traits, you simply show them various images of dogs. Over time, they learn to identify dogs independently, including those they have never encountered before. Machine learning works similarly: it learns from examples rather than explicit rules.
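One of the simplest ways to learn from examples is the k-nearest-neighbors method, sketched below in Python. No rule for “dog” is ever written: a new animal gets whichever label is most common among the labeled examples closest to it. The two features (weight and snout length) and all the numbers are hypothetical; modern image recognizers learn their own features directly from pixels rather than relying on hand-picked measurements like these.

```python
import math
from collections import Counter


def knn_classify(examples, query, k=3):
    """Label `query` by majority vote among the k closest labeled examples."""
    # Sort labeled examples by straight-line distance to the query
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical labeled examples: (weight in kg, snout length in cm) -> label
examples = [
    ((30.0, 10.0), "dog"),
    ((8.0, 6.0), "dog"),
    ((25.0, 9.0), "dog"),
    ((4.0, 2.0), "cat"),
    ((5.0, 2.5), "cat"),
    ((3.5, 1.8), "cat"),
]

# An animal that does not appear in the examples
print(knn_classify(examples, (20.0, 8.0)))  # prints: dog
```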

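The most prominent form of machine learning today is deep learning, which uses neural networks. These networks are loosely inspired by the human brain: they consist of interconnected layers of nodes, similar to neurons, and each layer transforms the information it receives before passing it on to the next. “Deep” refers to the many layers stacked in these networks.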
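To make “layers of nodes” concrete, here is a toy two-layer network in plain Python. Each node multiplies its inputs by weights, adds a bias, and (in the hidden layer) applies a ReLU activation. The weights are hand-picked, as an assumption for illustration, so that the network computes XOR, a function that a single node of this kind cannot represent; in a real network, the weights would be learned during training rather than set by hand.

```python
def relu(z):
    """A common activation function: keep positive values, zero out negatives."""
    return max(0.0, z)


def dense_layer(inputs, weights, biases, activation=None):
    """One fully connected layer: every node receives every input."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs


def xor_network(x1, x2):
    # Hidden layer: two nodes, each connected to both inputs (hand-picked weights)
    hidden = dense_layer([x1, x2], weights=[[1.0, 1.0], [1.0, 1.0]],
                         biases=[0.0, -1.0], activation=relu)
    # Output layer: one node connected to both hidden nodes
    (output,) = dense_layer(hidden, weights=[[1.0, -2.0]], biases=[0.0])
    return output


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```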

A Brief History of AI Development

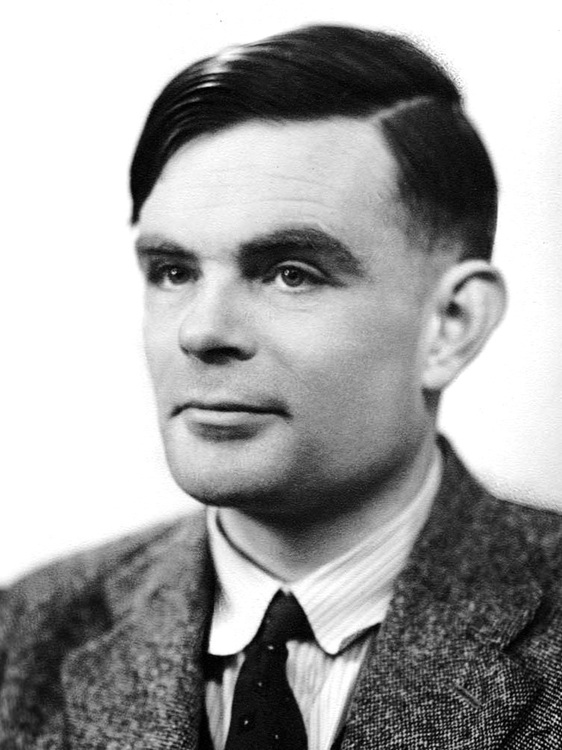

Surprisingly, the question of machine intelligence is almost as old as the computer itself. In 1950, when electronic computers were still a new invention, British mathematician Alan Turing asked: Can machines think? His “imitation game,” now known as the Turing Test, suggested that if a machine can hold a conversation indistinguishable from a human’s, it may be considered intelligent. This idea laid the groundwork for the field of AI.

The term “Artificial Intelligence” was introduced in the proposal for a 1956 workshop at Dartmouth College, organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon to explore whether machines could be made to simulate aspects of human intelligence. The workshop is widely regarded as the formal founding of AI as a field of study.

The following decades brought both enthusiasm and setbacks. Initial excitement gave way to disappointment when progress stalled, leading to periods of reduced funding and interest known as “AI winters.” Early AI systems could solve well-defined mathematical and logical problems but struggled with understanding natural language and recognizing objects.

In the 1980s, expert systems, which encoded specialists’ knowledge as hand-written rules, were built for narrow tasks but struggled to adapt beyond them. The 2010s then brought a resurgence driven by three developments: the vast amount of data generated online, powerful GPUs well suited to AI workloads, and improvements in deep learning algorithms.

AlexNet marked a turning point in 2012, when it won the ImageNet image-recognition challenge by a wide margin and revived interest in neural networks. In 2017, researchers at Google introduced the Transformer architecture in their paper “Attention Is All You Need”; it remains the foundation of many large language models, including GPT-5 and Gemini 3 Pro.
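The core operation introduced in that paper is scaled dot-product attention, defined there as softmax(QKᵀ / √d_k) V, where Q, K and V are matrices of query, key and value vectors and d_k is the key dimension. The sketch below implements just that formula in plain Python for a single attention head, without the masking, multiple heads, or learned projections a real Transformer uses; the three token vectors are made-up numbers.

```python
import math


def softmax(row):
    """Convert a list of scores into probabilities that sum to 1."""
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, with each matrix given as a list of row vectors."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        # How well this query matches each key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors (made-up numbers). In a real Transformer, Q, K and V
# come from learned linear projections of the token embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = scaled_dot_product_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of printed weights sums to 1 and shows how much one token “attends to” every token in the sequence, including itself.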

Types of AI

AI is generally divided into two main types: narrow AI and general AI. Narrow AI is built for specific tasks, such as Spotify’s music recommendations, image-recognition algorithms, or chatbots like ChatGPT. These systems excel in their designated areas but cannot operate beyond them.

General AI, or Artificial General Intelligence (AGI), by contrast, refers to systems that could understand, learn, and apply knowledge across a broad range of tasks with human-like versatility. AGI remains hypothetical, but organizations such as OpenAI, Google DeepMind, and Anthropic are actively working toward it.

Limitations and Challenges in AI

While AI systems offer vast potential, they also face significant challenges. For starters, these systems learn from extensive datasets, which may contain inherent biases. For example, if historical hiring data reflects gender discrimination, an AI system may inadvertently perpetuate those biases. AI developers are actively working to mitigate bias by refining training datasets.
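The hiring example can be sketched with a deliberately naive “model” that simply learns the historical hire rate for each group of candidates. The records, field names, and numbers below are invented for illustration and are not drawn from any real dataset. Because the data favors one group, two candidates with identical experience receive different scores, which is how a learned system can carry historical bias forward.

```python
from collections import defaultdict

# Invented historical records: (years_experience, gender, was_hired).
# Equally experienced women were hired less often, so the data encodes that bias.
history = [
    (5, "M", True), (5, "M", True), (5, "M", True), (5, "M", False),
    (5, "F", True), (5, "F", False), (5, "F", False), (5, "F", False),
]


def train_hire_rate_model(records):
    """Naive 'model': learn the historical hire rate for each (experience, gender) group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [hired, total]
    for experience, gender, hired in records:
        counts[(experience, gender)][0] += int(hired)
        counts[(experience, gender)][1] += 1
    return {group: hired / total for group, (hired, total) in counts.items()}


model = train_hire_rate_model(history)
# Two candidates identical except for gender receive different scores
print(model[(5, "M")], model[(5, "F")])  # prints: 0.75 0.25
```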

Moreover, today’s AI systems lack a human-like understanding of the world. Although they can outperform humans at games like chess and Go, they do not have the common sense and intuitive grasp of reality that even a young child has.

Finally, there is the “black box” problem. Researchers often cannot fully explain how an AI system arrives at a particular conclusion, which becomes a pressing concern as these systems are given more responsibility and decision-making power.

What is the future of AI? Ongoing research and development suggest that AI will become even more deeply integrated into everyday life.

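Can AI learn on its own after initial training? Usually not in the way people imagine. Most AI systems learn during training, and their learned parameters stay fixed once they are deployed unless developers retrain or fine-tune them. What lets a trained model handle new situations is generalization: it applies the patterns it learned to inputs it was never explicitly programmed for.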

How is AI impacting businesses today? Businesses across industries are harnessing AI to enhance customer service, streamline operations, tailor marketing strategies, and improve decision-making processes, leading to increased efficiency and productivity.

What are the real-world applications of AI today? AI is used in many fields, from diagnosing diseases in healthcare and algorithmic trading in finance to customer-service chatbots and content recommendations in entertainment.

Do AI systems require a lot of data to function effectively? Generally, yes. Modern AI systems, especially deep learning models, are trained on large datasets, which allow them to learn patterns and make accurate predictions or decisions about new inputs.

As AI continues to evolve and reshape our daily lives, remaining aware of its implications and possibilities is crucial. The journey of understanding and engaging with this technology is just beginning. For more insights and to stay updated on the latest trends in technology, explore content on Moyens I/O.